The Protocol Graveyard

In the summer of 1994, during my high school days, my family rented a house for a week on Bay Avenue in Ocean City, New Jersey. In preparation for the vacation, I had checked out a book from the Camden County library on this new thing called the Internet. I had not actually used the internet yet (or even online services like CompuServe and Prodigy), but I was very interested in computers and it seemed like an interesting book, so I decided to check it out for some light beach reading.

The book described a wide variety of great protocols and applications available at the time. Most of these are not used anymore. The book contained a single chapter on something called the World Wide Web. It was an interesting chapter, no doubt, but it was not clear at the time that the web would come to dominate our online lives and that most of the other protocols and applications would be all but dead within two decades.

This article will recall some of the great protocols of the internet past.

Gopher

Gopher [1] was the protocol behind a distributed document retrieval system of the early 1990s. You would point your Gopher client to a server and get a list of menu links. Each of those links could take you to another menu or a file, and those could be on a different server. This might sound familiar. Gopher was very much a forerunner to the World Wide Web.
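To make the menu idea concrete, here is a minimal Python sketch of the exchange as RFC 1436 [1] describes it: the client sends a selector string followed by CRLF, and the server answers with one menu item per line, each made of tab-separated fields (a type character fused to the display text, then the selector, host, and port), ending with a lone period. The names MenuItem, parse_menu, and fetch_menu are my own and purely illustrative, and real servers may send lines this sketch simply skips.

```python
import socket
from typing import NamedTuple


class MenuItem(NamedTuple):
    item_type: str  # per RFC 1436, "0" is a text file and "1" is another menu
    display: str
    selector: str
    host: str
    port: int


def parse_menu(raw: str) -> list[MenuItem]:
    """Parse the text of a Gopher menu into MenuItem tuples."""
    items = []
    for line in raw.split("\n"):
        line = line.rstrip("\r")
        if line == ".":  # a lone period marks the end of the menu
            break
        fields = line.split("\t")
        if len(fields) < 4 or not fields[0]:
            continue  # skip malformed lines rather than fail
        try:
            port = int(fields[3])
        except ValueError:
            continue
        items.append(
            MenuItem(fields[0][0], fields[0][1:], fields[1], fields[2], port)
        )
    return items


def fetch_menu(host: str, selector: str = "", port: int = 70) -> list[MenuItem]:
    """Send a selector to a Gopher server and parse the reply as a menu."""
    with socket.create_connection((host, port), timeout=10) as sock:
        sock.sendall(selector.encode("ascii") + b"\r\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection when it is done
                break
            chunks.append(data)
    return parse_menu(b"".join(chunks).decode("latin-1"))
```

Calling fetch_menu with a server name and an empty selector asks for that server's top-level menu. Because every item carries its own host and port, following a link to a different server is just another call to fetch_menu.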

Gopher was a major hit when it came out in 1991, released by a team of software developers at the University of Minnesota. In a matter of months universities, government agencies, and tech companies were standing up Gopher servers. By 1994, there were nearly 7,000 Gopher sites.

In 1993, management at the University of Minnesota chose to start charging licensing fees for its server software [2]. This greatly quelled enthusiasm for Gopher on the internet. Around the same time, the small team working on Gopher was redirected to work on other internal projects. Then the World Wide Web started really taking off. The web was a similar system of linked documents retrievable over the internet but was more flexible and had better support for images and graphics. By the mid-1990s, the World Wide Web had eclipsed Gopher and there was no going back.

Not Dead Yet: Some people have kept the flame for Gopher alive into 2025. It is quite easy to access the remnants of gopherspace from a Linux computer today. Just install lynx, a text-based browser with good Gopher protocol support. Then run:

$ lynx gopher://gopher.floodgap.com

There you can find links to other Gopher servers and the Veronica-2 gopherspace search engine.

You can also install the 1990s Gopher client from the University of Minnesota on Debian GNU/Linux with:

$ sudo apt install gopher

$ gopher gopher://gopher.floodgap.com

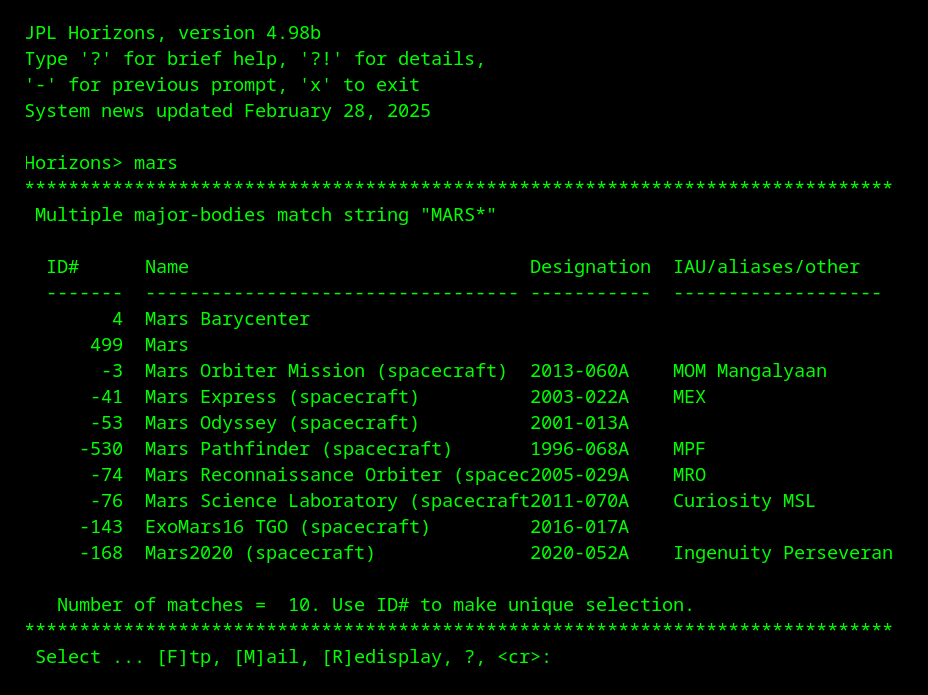

And don’t forget to change your terminal colors to green-on-black for the full retro experience!

Telnet

Telnet [3] was once the standard protocol for remotely logging into a host on the internet via a text-based interface. Telnet could be used to access servers using a personal account or to access public facing applications such as library catalogs and multi-user dungeons (MUD).

Telnet’s fatal flaw was its lack of encryption. Passwords were transmitted in clear text and could easily be lifted by an eavesdropper on the network running their network interface card in promiscuous mode. Telnet has been superseded by SSH for text-based remote host access, and most public facing applications have been moved to the web.

Not Dead Yet: Telnet clients are readily installable for Linux and other UNIX-like operating systems. MUDs are generally still available, for example:

$ telnet dunemud.net 6789

$ telnet legendsofthejedi.com 5656

There is also a telnet server available for the nethack game:

$ telnet nethack.alt.org

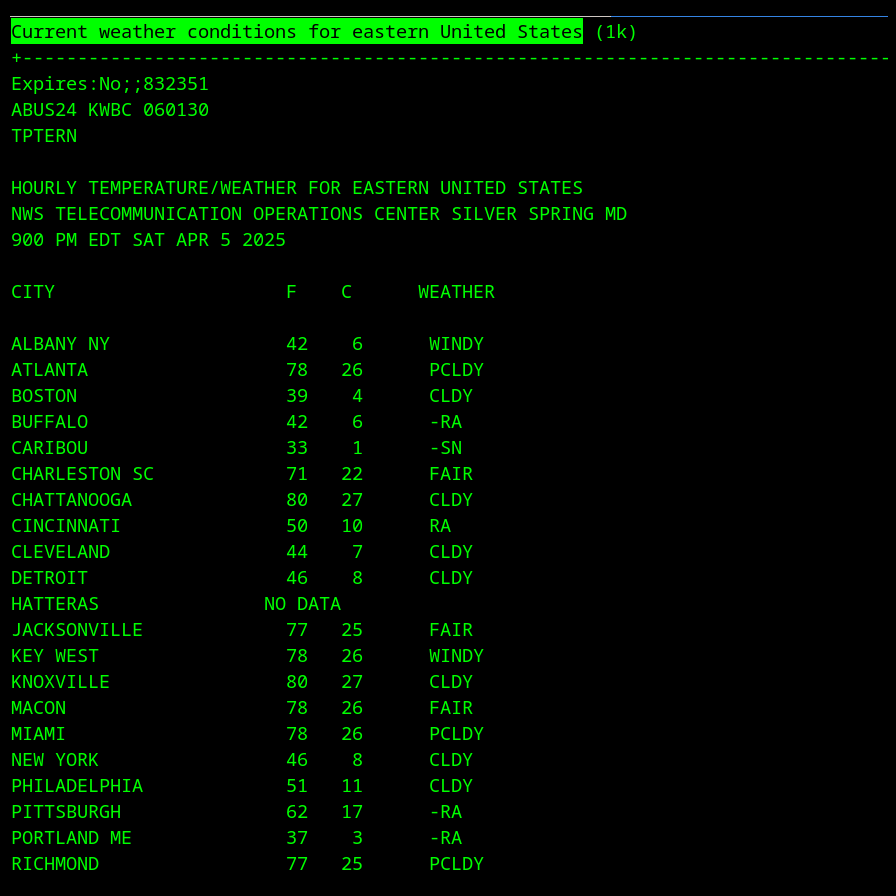

The one non-game application I was able to find for this article was the Horizons System by NASA JPL, which provides the ability to generate custom ephemeris datasets for planets, satellites, asteroids, etc.

$ telnet horizons.jpl.nasa.gov 6775

A web version has also been available for a long time, but the telnet version is still available in 2025.

Finger

Finger [4][5] was a protocol that let you get information about a user on a particular server. You could get things like their current online status, the last time they logged in, and whether they had unread mail. Users could write a .plan file in their home directory which would be returned as part of the finger response letting that user customize their finger information however they wanted. You could even get a list of all users on a particular system and their current statuses. The protocol had no method of authentication or authorization. The information returned over the finger protocol was completely public.

Finger was primarily used during a time of innocence on the internet. People were focused on the positive possibilities of information availability and gave little thought to spammers or scammers. But as the internet grew in users, so did its history of security incidents. System administrators wisely stopped running the finger daemon or limited access behind a firewall. Finger gradually evolved from a system providing information about all users to one providing only deliberately published information. Some high-profile developers such as John Carmack and John Romero of id Software used the finger .plan file during the 90s to post informal writings, serving as a kind of precursor to blogging and microblogging.

One of the amazing things about the finger protocol is its simplicity. The client simply makes a TCP/IP connection and sends a simple command ending in a CRLF and the server responds with whatever ASCII text it wants to return in any format it wants. The command itself is actually optional. Send just a CRLF to request information on all users. RFC 742 and the later more elaborate RFC 1288 are worth a casual read just for fun.
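That simplicity means a working client fits in a few lines. The following Python sketch is my own illustration rather than any standard implementation: it opens a TCP connection to port 79 (the port assigned to finger), sends the optional user name plus CRLF, and reads until the server closes the connection. The function names build_query, split_target, and finger are hypothetical.

```python
import socket


def build_query(user: str = "") -> bytes:
    """Return the bytes a finger client sends: an optional user name plus CRLF."""
    return user.encode("ascii") + b"\r\n"


def split_target(target: str) -> tuple[str, str]:
    """Split 'user@host' (or '@host') into (user, host)."""
    user, _, host = target.rpartition("@")
    return user, host


def finger(target: str, port: int = 79, timeout: float = 10.0) -> str:
    """Query a finger server and return whatever text it sends back."""
    user, host = split_target(target)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_query(user))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection when it is done
                break
            chunks.append(data)
    # The reply is free-form text, so decode leniently.
    return b"".join(chunks).decode("utf-8", errors="replace")
```

Passing a target with an empty user part, such as "@host", sends only the CRLF, which is the request for information on all users.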

Ultimately, a protocol providing information about users with no authentication whatsoever is a gift to hackers who want to do social engineering. Operating systems began disabling fingerd by default and system administrators were more than happy to keep it that way.

Not Dead Yet: It is still very common for a finger client to be installed by default on UNIX-like systems. A small fingerverse still exists on the internet today in 2025. Try getting into the fingerverse with the following command:

$ finger ring@thebackupbox.net

FTP

The File Transfer Protocol (FTP) [6] was once the dominant way to download files from the internet. Source code, binaries, datasets, books and articles were all available on public FTP sites. Usually users would log on to an FTP server using a username of “anonymous” and were sometimes asked to use their email address as the password, which was generally ignored. FTP was also used for the transfer of private files between hosts. Individuals could upload files from their local host to their home directory on a remote host for their own personal use.

FTP was a unique protocol in that it used two TCP/IP connections: one for commands and another for data. This maintained a clean separation of concerns between these two types of transmissions but also meant that a second dynamically determined port had to be used. This caused headaches for network security engineers setting up a firewall. If they made the port range too small, the randomness of dynamic port determination would be less secure. And making the port range too large would defeat the purpose of having a firewall in the first place.
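The dynamically determined port is negotiated over the command connection itself. In the passive mode defined by RFC 959 [6], the server's 227 reply carries six decimal numbers: four for the IP address and two for the port, which is the fifth number times 256 plus the sixth. In active mode the client sends the same six numbers in a PORT command. The Python sketch below only illustrates that arithmetic; the function names are hypothetical and the addresses in the usage note are documentation examples.

```python
import re


def parse_pasv_reply(reply: str) -> tuple[str, int]:
    """Extract (host, port) of the data connection from a 227 reply."""
    match = re.search(r"\((\d+),(\d+),(\d+),(\d+),(\d+),(\d+)\)", reply)
    if match is None:
        raise ValueError(f"not a passive-mode reply: {reply!r}")
    nums = [int(n) for n in match.groups()]
    if any(n > 255 for n in nums):
        raise ValueError(f"field out of range in reply: {reply!r}")
    host = ".".join(str(n) for n in nums[:4])
    port = nums[4] * 256 + nums[5]  # high byte first, then low byte
    return host, port


def format_port_command(host: str, port: int) -> str:
    """Build the PORT command an active-mode client sends to the server."""
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return f"PORT {host.replace('.', ',')},{port // 256},{port % 256}"
```

For example, parse_pasv_reply("227 Entering Passive Mode (192,0,2,10,195,80)") yields the host 192.0.2.10 and port 50000. A firewall has to either allow that whole range of possible data ports or inspect the command connection to learn which one was chosen.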

Like all the early internet protocols, all data was transmitted in cleartext. This includes user names, passwords and the contents of private files. Even in the case of a public FTP site, you needed an authorized person to be able to upload and manage the contents of the server. The credentials of such users had to be transmitted in the clear. And once a file was on the FTP site, it was generally assumed to be legitimately published by the site and not a hacker. There were few options available for file verification‒usually just simple file hashes or checksums, and even those were not routinely performed.

Ultimately, for these security concerns and others, FTP gave way to HTTP and eventually HTTPS as the dominant ways to download public files from the internet. HTTPS uses a single port, which makes firewall configuration a breeze, and verifies keys through a well-established trust hierarchy that the user is generally not even aware of unless there is an issue. SFTP, which is file transfer over the Secure Shell (SSH) protocol, has become the dominant way of transferring private files, with all file content and credentials fully encrypted.

Not Dead Yet: Anonymous FTP servers can still be found on the internet today. Back in the heyday of anonymous FTP, ftp.funet.fi was one of the most popular anonymous FTP sites, and it is still up and available today and even updated with new content. File timestamps range from the 1980s up until 2025. It mostly offers free and open source software, but other types of files such as academic papers and sports statistics can also be found.

IRC

Internet Relay Chat (IRC) [7] was once a thriving and bustling area of the internet. IRC was the first widely popular online real-time chat system, having supplanted earlier more limited systems such as the UNIX talk command.

IRC allowed multiple chat servers across the globe to connect and form an IRC network. Users could connect to their closest regional server which was in turn connected to all other servers on that IRC network thereby enabling global real-time communication.

IRC could be quite a fun and addictive place to hang out. Channels such as #funfactory often had multiple conversation threads going at once. People implemented bots for trivia games and joke telling. IRC was also a great place to ask questions and get help with various technical topics especially programming languages and operating systems.

The IRC protocol itself had no provision for authentication. Nicknames were not owned by a particular user in the protocol itself. Bots had to be implemented to enforce additional policies such as nickname registration and protection with a password. While IRC went strong well into the 21st century, it eventually gave way to instant messaging clients such as AOL Instant Messenger and eventually more feature-rich chat applications such as Slack and Discord.
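The absence of per-user credentials shows up in the connection handshake. A client introduces itself with a NICK message and a USER message, and neither one proves who the sender is. The Python sketch below builds those two lines. The function name is hypothetical, and the "0 *" placeholders follow the USER form from the later RFC 2812, which is an assumption on my part since this article cites only RFC 1459 [7].

```python
def registration_lines(nick: str, user: str, realname: str) -> list[bytes]:
    """Build the NICK and USER messages a client sends when it connects."""
    for value in (nick, user):
        if not value or any(c in value for c in " \r\n"):
            raise ValueError(f"invalid parameter: {value!r}")
    if "\r" in realname or "\n" in realname:
        raise ValueError("realname must not contain line breaks")
    return [
        f"NICK {nick}\r\n".encode("utf-8"),
        # Assumed RFC 2812 form: USER <user> <mode> <unused> :<realname>
        f"USER {user} 0 * :{realname}\r\n".encode("utf-8"),
    ]
```

Whoever sends a free nickname first gets it, which is why networks ended up layering registration bots on top of the protocol.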

Not Dead Yet: Some IRC networks are still up and operational such as DALnet and Libera.Chat. There are still good IRC clients available for use such as HexChat for Linux and mIRC for Windows which has been around since 1995 and is still being updated.

NNTP

The Network News Transfer Protocol (NNTP) [8][9] was the protocol behind the Usenet system of online newsgroups. Usenet was a decentralized “news” system where users could post messages to topics of interest and download postings from a topic of interest. It was like an email group mailing list except more efficient and globally scalable. It included both moderated and unmoderated newsgroups.

Some humble posts like Linus Torvalds's announcement of his hobby OS project [10] and Tim Berners-Lee’s World Wide Web project for making academic information available to anyone on the internet [11] were made on Usenet and later became legendary.

Usenet was also used extensively for file sharing. Although NNTP was inherently a text-based protocol, files were shared using text-encoding schemes such as uuencode or base64. Files shared on Usenet were of both the legal and illegal type. In the mid to late 2000s, ISPs started reducing or eliminating their Usenet offerings and Usenet began its decline. Some also speculate that pressure from politicians such as New York Attorney General Andrew Cuomo cracking down on child pornography on the internet may have played a role as well.
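The text-encoding trick mentioned above is easy to demonstrate with Python's standard library. This sketch turns arbitrary bytes into short ASCII lines with base64 and with uuencode-style lines, then reverses the process. The helper names are my own, the 76-character line width is just a common convention, and the sketch leaves out the begin/end header lines and the splitting of large files across multiple posts.

```python
import base64
import binascii


def to_base64_text(data: bytes, width: int = 76) -> str:
    """Encode bytes as base64, wrapped into lines of at most `width` characters."""
    encoded = base64.b64encode(data).decode("ascii")
    return "\n".join(encoded[i:i + width] for i in range(0, len(encoded), width))


def from_base64_text(text: str) -> bytes:
    """Decode wrapped base64 text back into the original bytes."""
    return base64.b64decode("".join(text.split()))


def uuencode_body(data: bytes) -> str:
    """Encode bytes as uuencode-style lines, 45 input bytes per line."""
    return "".join(
        binascii.b2a_uu(data[i:i + 45]).decode("ascii")
        for i in range(0, len(data), 45)
    )


def uudecode_body(text: str) -> bytes:
    """Decode the lines produced by uuencode_body."""
    return b"".join(binascii.a2b_uu(line) for line in text.splitlines())
```

Both schemes spend roughly four characters of text for every three bytes of data, which was the price of pushing binaries through a text-only system.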

Right around the time that ISPs started curtailing their Usenet offerings, modern social media apps like Reddit, Facebook, and Twitter began to take off. File sharing moved to peer-to-peer systems such as BitTorrent. Usenet lived on for quite a while longer with Google Groups, which offered public Usenet access via the web alongside its own discussion group system. But in 2024 Google Groups stopped accepting new posts and now serves as a public Usenet archive.

Not Dead Yet: Starting at about $10 a month and up, Usenet is still alive with commercial services such as Newshosting and Easynews. I have not ponied up the money to find out what content is out there in Usenet today.

PPP and SLIP

The PPP (Point-to-Point Protocol) [12] and its older, less advanced sibling SLIP (Serial Line Internet Protocol) [13] enabled widespread household Internet access with a modem and dial-up networking. PPP worked by wrapping and embedding IP packets into the byte stream of a modem connection. These protocols provided a significant innovation over earlier methods of accessing the Internet with a PC. The earlier methods, which used tools like Minicom and Seyon, essentially gave you a remote terminal on an Internet-connected host and limited the user’s experience to text-based terminal applications. PPP and SLIP made your computer a host on the network itself. This greatly improved the user experience of getting on the Internet, made downloading files easier, and, most importantly, enabled graphical web browsing. Millions of people accessed the Internet for the first time from a PC in their home using PPP and SLIP.
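The wrapping is easiest to see with SLIP, the simpler of the two. RFC 1055 [13] marks the end of each packet with an END byte (0xC0) and replaces any END or ESC (0xDB) bytes inside the packet with two-byte escape sequences. The Python sketch below follows that scheme. The function names are mine, and PPP's more elaborate framing and link negotiation are not shown.

```python
END = 0xC0      # marks a packet boundary
ESC = 0xDB      # introduces an escape sequence
ESC_END = 0xDC  # ESC ESC_END stands for a literal END byte
ESC_ESC = 0xDD  # ESC ESC_ESC stands for a literal ESC byte


def slip_encode(packet: bytes) -> bytes:
    """Frame one IP packet for transmission over a serial line."""
    out = bytearray([END])  # optional leading END flushes any line noise
    for byte in packet:
        if byte == END:
            out += bytes([ESC, ESC_END])
        elif byte == ESC:
            out += bytes([ESC, ESC_ESC])
        else:
            out.append(byte)
    out.append(END)
    return bytes(out)


def slip_decode(stream: bytes) -> list[bytes]:
    """Recover the packets contained in a received byte stream."""
    packets = []
    current = bytearray()
    escaped = False
    for byte in stream:
        if escaped:
            if byte == ESC_END:
                current.append(END)
            elif byte == ESC_ESC:
                current.append(ESC)
            else:
                current.append(byte)  # protocol violation: keep the byte as-is
            escaped = False
        elif byte == ESC:
            escaped = True
        elif byte == END:
            if current:  # ignore empty frames between back-to-back ENDs
                packets.append(bytes(current))
                current = bytearray()
        else:
            current.append(byte)
    return packets
```

Everything between two END bytes is a complete IP packet, which is what let a home PC behind a modem act as a full host rather than a terminal.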

Not Dead Yet: The Linux kernel still has network device driver modules for PPP and SLIP that are built by default. GNU/Linux distros continue to maintain a ppp package that includes additional tools such as pppd and chat to establish a network connection using PPP. Most amazingly, there are still ISPs in the US, such as NetZero, offering dial-up Internet in 2025.

Conclusions

In reviewing these old bygone protocols, the themes of security, multimedia, and generality emerge clearly.

The early internet did not employ much in the way of security. Encryption was once considered a military technology and not something needed for public information sharing. The world needed a few decades of Moore’s law before computers were powerful and affordable enough to make encryption routine. The desire to execute commercial transactions on the internet necessitated a new view of encryption. When this switch came by the mid to late 1990s, protocols needed to either adapt or face extinction.

Computers were originally designed to work with numbers. Support for text was later added. Computer graphics and audio were in an early stage of research and development when the internet began development. So the first generation of internet protocols and applications were text-based. As the two lines of development matured, it became obvious that the internet should be combined with multimedia technologies. The web browser was the best platform available to bring together text, graphics, and audio in a coherent user experience. Other protocols and applications could at best simply download multimedia files for separate consumption.

One of the most powerful ideas of the World Wide Web is that documents need not be limited to text. With the advent of JavaScript and its combination in the browser with HTML and CSS, the world gained a platform for distributing general-purpose applications, and the old text-based protocols began dying off. Today we mourn and remember them with reverence as we move onward into the future of the internet.

References

- Anklesaria, F., McCahill, M., Lindner, P., Johnson, D., Torrey, D., and B. Alberti, "The Internet Gopher Protocol (a distributed document search and retrieval protocol)", RFC 1436, DOI 10.17487/RFC1436, March 1993, https://www.rfc-editor.org/info/rfc1436.

- Williams, M. (1995, Aug 6). The Gopher Navigator Finds a Niche in the Web World Order. The Washington Post. https://www.washingtonpost.com/archive/business/1995/08/07/the-gopher-navigator-finds-a-niche-in-the-web-world-order/c0c7c0e4-0e64-4f57-ac8f-7bb295a893ba/

- Postel, J. and J. Reynolds, "Telnet Protocol Specification", STD 8, RFC 854, DOI 10.17487/RFC0854, May 1983, https://www.rfc-editor.org/info/rfc854.

- Harrenstien, K., "NAME/FINGER Protocol", RFC 742, DOI 10.17487/RFC0742, December 1977, https://www.rfc-editor.org/info/rfc742.

- Zimmerman, D., "The Finger User Information Protocol", RFC 1288, DOI 10.17487/RFC1288, December 1991, https://www.rfc-editor.org/info/rfc1288.

- Postel, J. and J. Reynolds, "File Transfer Protocol", STD 9, RFC 959, DOI 10.17487/RFC0959, October 1985, https://www.rfc-editor.org/info/rfc959.

- Oikarinen, J. and D. Reed, "Internet Relay Chat Protocol", RFC 1459, DOI 10.17487/RFC1459, May 1993, https://www.rfc-editor.org/info/rfc1459.

- Kantor, B. and P. Lapsley, "Network News Transfer Protocol", RFC 977, DOI 10.17487/RFC0977, February 1986, https://www.rfc-editor.org/info/rfc977.

- Feather, C., "Network News Transfer Protocol (NNTP)", RFC 3977, DOI 10.17487/RFC3977, October 2006, https://www.rfc-editor.org/info/rfc3977.

- Torvalds, L.B. (1991, Aug 25), What would you like to see most in minix?, [Usenet post]: comp.os.minix. Archived at: https://lwn.net/2001/0823/a/lt-announcement.php3

- Berners-Lee, T. (1991, Aug 6), Re: Qualifiers on Hypertext links..., [Usenet post]: alt.hypertext. Archived at: https://www.w3.org/People/Berners-Lee/1991/08/art-6484.txt

- Simpson, W., Ed., "The Point-to-Point Protocol (PPP)", STD 51, RFC 1661, DOI 10.17487/RFC1661, July 1994, https://www.rfc-editor.org/info/rfc1661.

- Romkey, J., "Nonstandard for transmission of IP datagrams over serial lines: SLIP", STD 47, RFC 1055, DOI 10.17487/RFC1055, June 1988, https://www.rfc-editor.org/info/rfc1055.

Acknowledgements

ChatGPT was used in the preparation of this article for light proofreading and stylistic suggestions.